Not Your Grandpa’s Talking Animals: How Animators Kept ‘The Jungle Book’ Real

Much has been made of the innovative virtual filmmaking techniques used to create Jon Favreau’s The Jungle Book. That aspect of the production was clearly central to helping a young actor, Neel Sethi (Mowgli), appear to interact with a range of CGI animals, but the animators were just as important: they gave the jungle’s inhabitants emotion by making them talk while keeping them completely believable.

Using the examples of Shere Khan the Bengal tiger (voiced by Idris Elba) and the wolf Raksha (Lupita Nyong’o), both created by VFX house MPC, Cartoon Brew delves into the animation challenges of the film.

For all of the creatures it created—54 animal species in total—MPC began work on The Jungle Book with extensive referencing. The animation team gathered and organized a voluminous library of footage from nature documentaries and online videos. “When each shot was started,” outlined character animation supervisor Benjamin Jones, “the animators would search through the library and choose two or three clips that they thought matched the intention of the performance required. Often it would be one clip to get the body language and one clip for the face and head which looked like a real animal doing something close to the emotional performance that we were aiming for in the shot.”

MPC’s animators also crafted mood boards of images that represented individual components of the animals. “Narrowing down the reference to specific selects allowed our artists to have a more targeted approach to their work, as the sheer amount and variation in the reference could be intimidating or contradictory,” said Jones. “In the end we would have a pretty good set of go-to images for a character that showed the best reference for fur, model, texture, lighting, animation etc.”

What the artists would be animating started with accurate skeleton models. “On top of all that, we would simulate an accurate muscle system with automatic muscle flex detection based on movements of the joints, and a cloth-like simulation for the skin,” said Jones. “MPC’s pipeline allowed auto generation of all the character muscle and skin simulations for all active shots overnight when a rig update was made.”
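To give a rough sense of what flex detection driven by joint movement could look like, here is a minimal sketch. It is not MPC’s rig code: the function name, the linear mapping, and the angle values are all assumptions made for illustration.

```python
def flex_activation(joint_angle_deg, rest_angle_deg=180.0, full_flex_angle_deg=60.0):
    """Map a joint angle to a muscle flex value between 0 and 1.

    Hypothetical linear model: the muscle is relaxed when the joint is
    at its rest angle and fully flexed at full_flex_angle_deg.
    """
    span = rest_angle_deg - full_flex_angle_deg
    t = (rest_angle_deg - joint_angle_deg) / span
    # Clamp so angles outside the range stay within 0..1.
    return max(0.0, min(1.0, t))


# A straight limb is relaxed, a half-bent limb is half flexed.
relaxed = flex_activation(180.0)
half = flex_activation(120.0)
full = flex_activation(60.0)
```

In a setup like this, the flex value would feed a muscle simulation so animators never have to key the flex by hand; a production rig would use far richer inputs than a single angle.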

Perhaps one of the most difficult challenges of the film, from an animation standpoint, came in the form of lip sync. The visual effects teams were tasked with not only creating photorealistic creatures but also making them talk, something that could easily have pulled the film away from realism. MPC relied on both animal jaw reference and video of the voice actors performing the dialogue.

“As we wanted to keep the motion of the faces of the characters more animal than human, the face of the actor was used for timing reference of the mouth and eyes, and also a key part was to layer in some of the head motion from the voice actor on top of the animal reference,” said Jones. “This subtle head motion really added a lot of personality from the individual actors into the animation.”

“Animals can be very expressive,” added Jones, “but not necessarily in the same way humans are. We tried to steer clear of as many human traits as possible and put a lot of the emotion into the body language such as ears and tail; the tail movement of animals can be a huge emotional cue. When it felt like the facial performance was getting too exaggerated or anthropomorphic we would back off on it and look at adding more cues to the body to get a more natural feel, but still keep the emotional core.”

Animals such as Shere Khan and Raksha would, of course, not come across as real without a convincing fur coat. MPC has years of experience in this area with its proprietary Furtility tool. Each wolf, for example, had in excess of 10 million hairs, making the scenes very render-heavy.

“Our R&D team developed optimizations to the groom to reduce the level of detail as the characters get further from camera, which allowed us to get the renders through,” noted Jones. “We utilized SideFX Software’s Houdini to simulate the fur; this allowed much more flexibility with what we could do with interaction in the fur. For the wolves in the rain, for example, we could simulate the effect of individual hairs of the fur getting hit by raindrops and springing back. The effects team would also use Houdini to simulate thousands of water particles in the fur that would drip off the tips of the fur, and run down the individual hairs.”
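The distance-based level of detail Jones describes can be illustrated with a small sketch. This is not MPC’s or Furtility’s actual logic: the inverse-square falloff, the distance threshold, and the minimum fraction are assumed values chosen only to show the idea of rendering fewer hairs as a character moves away from camera.

```python
def hairs_to_render(full_count, distance, full_detail_distance=5.0, min_fraction=0.05):
    """Return how many hairs to render for a character at a given camera distance.

    Hypothetical rule: full detail up close, then an inverse-square
    falloff that never drops below min_fraction of the full groom.
    """
    if distance <= full_detail_distance:
        return full_count
    fraction = max(min_fraction, (full_detail_distance / distance) ** 2)
    return int(round(full_count * fraction))


# A 10-million-hair wolf at three camera distances (arbitrary units).
close_up = hairs_to_render(10_000_000, 2.0)
mid_shot = hairs_to_render(10_000_000, 10.0)
far_shot = hairs_to_render(10_000_000, 1000.0)
```

Schemes of this kind often also thicken the remaining hairs so the coat keeps its apparent density; the article does not say whether MPC did so.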

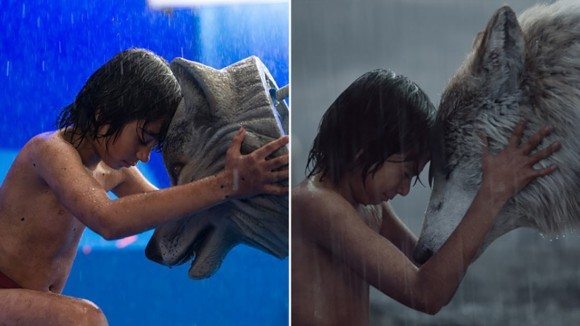

Sometimes the animals’ fur also had to interact with the environment or with Mowgli, such as a scene where the man-cub says goodbye to his pack and runs his hands through Raksha’s coat. The shot was filmed with a puppeteer (from The Jim Henson Company) controlling a practical puppet that Sethi interacted with on set. The puppet, specially manufactured from MPC’s digital character assets, was covered in a foam surface with 50% of the thickness of Raksha’s actual fur.

“This meant that when we replaced the puppet with our digital version,” continued Jones, “Neel’s hand would be just the right depth into the digital fur to get a good interaction and feeling of contact. We would track Neel’s hand and arm digitally and then use that to simulate collisions with the fur. To get the best lighting and shadow response possible for the hand that was buried in the fur we ended up blending to a digital hand.”

Placing their creatures in photoreal digital environments was another key step, and began with a significant real-world photographic texture shoot in India (by MPC’s Bangalore team). “This enabled our environments to be grounded in reality, and even more than that, allowed them to be directly influenced by actual environments in India,” said Jones. “Many of the rocks and trees were directly reconstructed using photogrammetry from these images. Our artists could then build the multiple massive environment sets by picking and choosing from a huge library of pre-existing rocks, plants, and trees.”

MPC added to these photographic reconstructions with custom pieces, too. “To bring the whole environment together we had a procedural tool to create ‘scatter’ on the entire environment,” said Jones. “This used a particle system to distribute sand, leaves, stones, small plants, mud, moss, and debris over the entire terrain, dynamically collecting in cracks and crevices of the environment automatically.”
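As a loose illustration of debris “collecting in cracks and crevices,” the sketch below drops particles at random positions on a small height grid and lets each one slide downhill until it reaches a local low point. The grid representation, the function names, and the settling rule are assumptions for this example, not a description of MPC’s tool.

```python
import random


def settle(height, x, y):
    """Walk a particle downhill on a 2D height grid until it reaches a local minimum."""
    rows, cols = len(height), len(height[0])
    while True:
        best_h, best_x, best_y = height[y][x], x, y
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nx, ny = x + dx, y + dy
            if 0 <= nx < cols and 0 <= ny < rows and height[ny][nx] < best_h:
                best_h, best_x, best_y = height[ny][nx], nx, ny
        if (best_x, best_y) == (x, y):
            return x, y  # no lower neighbor: the particle rests here
        x, y = best_x, best_y


def scatter(height, count, seed=0):
    """Drop `count` particles at random cells and tally where they come to rest."""
    rng = random.Random(seed)
    rows, cols = len(height), len(height[0])
    tally = [[0] * cols for _ in range(rows)]
    for _ in range(count):
        x, y = settle(height, rng.randrange(cols), rng.randrange(rows))
        tally[y][x] += 1
    return tally


# A tiny terrain with a single crevice in the middle.
terrain = [
    [3, 2, 3],
    [2, 0, 2],
    [3, 2, 3],
]
resting = scatter(terrain, 100)
```

On this toy terrain every particle ends up in the central crevice, which is the behavior the quote describes in miniature.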

The final creature count for MPC, which also crafted other major characters like Baloo the bear and Bagheera the black panther, was a whopping 224 variations on 54 animal species. It was an effort that also resulted in MPC producing 1,984 terabytes of data and running 240,000,000 render farm hours. And behind that work: more than 800 visual effects artists at MPC making it possible.