Digital Actors: Are They Ready For Their CG Close-ups?

The release of any new blockbuster featuring a cg human tends to reignite the debate over whether a completely believable, living, breathing digital actor has finally been created, or whether the character has slipped into the ‘Uncanny Valley.’

In several recent films, such digital actors have appeared only in limited sequences, such as Grand Moff Tarkin and Princess Leia in Rogue One, Wolverine in Logan, or Rachael in Blade Runner 2049. There are also countless examples of digital doubles standing in for actors in action scenes, and of cg face replacements, many of which audiences may not even notice.

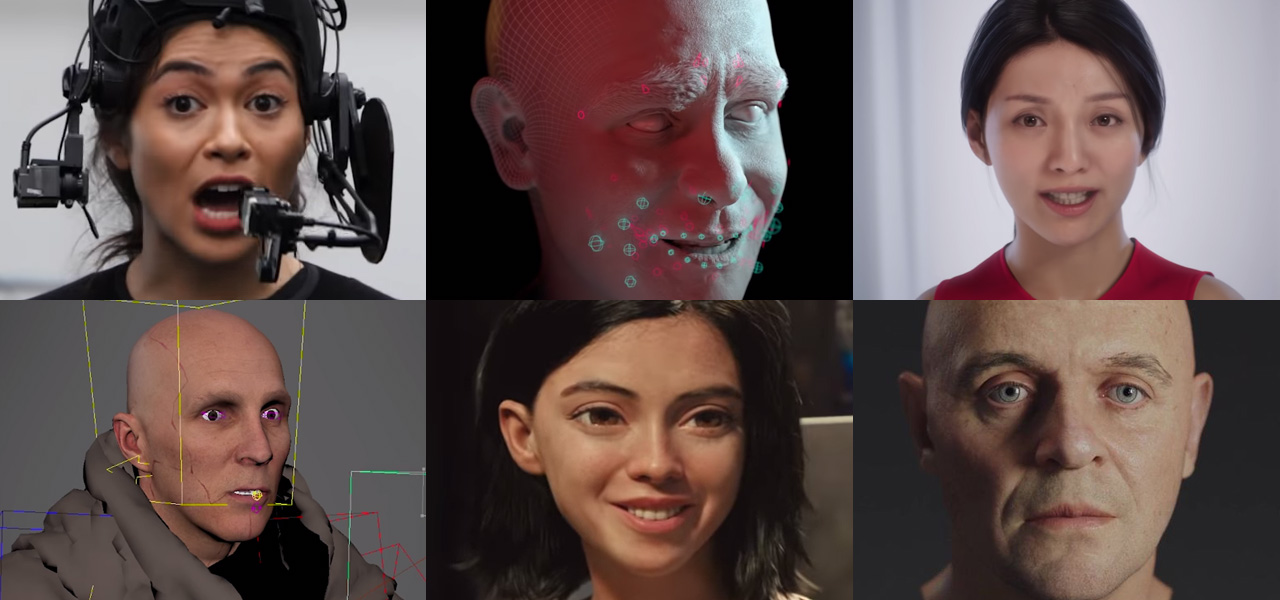

Now a new film, Alita: Battle Angel, has a completely computer-generated actor as its central character, one who appears for the entire length of the film. Could extended screen time become a permanent fixture for digital actors? Cartoon Brew looks at how Battle Angel and a slew of upcoming films, as well as current commercials, television shows, and real-time projects, have been pushing the boundaries of digital humans.

A digital Alita

The visual effects studio behind the cg Alita for Battle Angel is Weta Digital, no stranger to digital characters, including human and humanoid ones. Its work resurrecting Paul Walker for Furious 7, after the actor died mid-production, is still considered a benchmark for realistic cg humans; indeed, many audience members would likely be unable to tell which shots show the real Walker and which are synthetic.

Alita was brought to life starting with the performance capture of actor Rosa Salazar. Weta Digital then crafted Alita digitally, using its well-developed pipeline for combining performance capture with keyframe animation. It’s important to note that the cyborg character is not intended to be a strict one-to-one match to Salazar (Alita has larger-than-normal eyes and some robotic body parts). But she is human, and the intention is for the audience to engage with her as a photoreal digital person. Certainly, Weta Digital approached making her as it would any cg human.

In doing that work, Weta Digital stepped up its tools and techniques for digital actors. First, it implemented a new motion capture suit that included a stereo head-cam for facial capture, which helped record more of the nuance in Salazar’s facial performance, especially around her mouth. Second, before transferring the motion capture to the Alita model, the studio first applied it to a matching cg puppet of Salazar herself. Weta Digital had taken the same step in its work on Thanos for Avengers: Infinity War, the aim being to confirm that all of the actor’s movements were properly reflected in a cg model before moving over to the actual cg character. Audiences will be able to see the latest results when Battle Angel is released on February 14th.

Notable cg humans in tv and commercials

But digital actors have not been confined to big films. Digital doubles make regular appearances in television series and commercials, as do prominent cg humans. In season one of Westworld, vfx studio Important Looking Pirates was tasked with creating a young Anthony Hopkins for a series of shots. The studio did so by building a younger-looking cg version of Hopkins and inserting it onto a stand-in actor.

A recent John Lewis commercial re-created Elton John performances from across his career using a cg model of the singer. MPC was responsible for the work (the studio had previously crafted a young digital Arnold Schwarzenegger for Terminator Genisys and a cg Rachael for Blade Runner 2049).

Major steps in real-time

In film, television, and commercials, visual effects studios usually have the luxury of offline rendering power to realize their cg humans. That is not the case for real-time projects such as immersive entertainment or games, where every frame must be rendered on the fly. Even so, major advances in game engines such as Unreal Engine and Unity, coupled with real-time capture methods, have produced remarkably convincing digital humans.

Consider, for example, the characters in Neill Blomkamp’s Adam shorts from Oats Studios, made with Unity. Or the ‘Siren’ project, in which Epic Games, 3Lateral, Cubic Motion, Tencent, and Vicon collaborated to deliver a realistic likeness of Chinese actress Bingjie Jiang. (Epic has also recently acquired 3Lateral, so expect even more from the company in this area.)

Meanwhile, academic research into real-time methods for creating digital humans continues at a rapid pace, and is often presented at conferences such as Siggraph. One researcher from the University of Southern California, Hao Li, is also CEO and co-founder of Pinscreen. The company has been developing what are essentially ‘instant’ 3D avatars, generated in a mobile app from a single input photo with the help of neural networks and years of research into replicating digital faces and hair.

What’s coming in the world of digital humans

Are we ‘there’ yet with photoreal cg actors? This year may prove to be one of the biggest showcases yet for such work, with several projects due for release.

A niche part of the field is the growing genre of digital human holograms, in which deceased singers are resurrected for live performances. Roy Orbison recently received that treatment from BASE Hologram, which is now also working on a show featuring a cg Amy Winehouse, who died in 2011.

Then there’s Ang Lee’s Gemini Man, due in October. It will feature Will Smith taking on a younger version of himself, a concept Disney had tested years earlier (without Smith) when the project was at that studio. The film may use a completely cg younger Smith, or it may rely on de-ageing techniques similar to those that produced younger versions of Michael Douglas and Michelle Pfeiffer in Ant-Man and the Wasp. Samuel L. Jackson is also getting the de-ageing treatment, reportedly for the entire film, in the upcoming Captain Marvel.

To deliver these cg humans, vfx studios continue to advance facial capture, scanning, muscle and flesh simulation, and skin and hair rendering. They have also begun adopting machine learning techniques. For example, Digital Domain, a studio deeply involved in digital humans since its work on The Curious Case of Benjamin Button and several celebrity holograms, used machine learning trained on data of human facial movement to help create its version of Thanos for Infinity War, and has recently been showcasing other machine learning R&D from its Digital Human Group.

Clearly, recent projects have shown that vfx studios can bring cg actors to life in remarkably convincing ways (whether they should, ethically, is sometimes a matter for debate, especially where deceased actors are concerned). Sustained performances pose bigger challenges, since audiences have more time to scrutinize what’s on screen. But years of advances in artistry and technology now seem to have brought digital actors to the point where they can truly take the stage.
