A Virtual Production Explainer: What It Is, And What It Could Mean For Your Project

If there’s a buzzword right now in filmmaking and visual effects, it is probably ‘virtual production.’ Think of it like this: say you are making a live-action film with characters. Traditionally, what you would need is a camera, actors, and a location. With virtual production, the camera, actors, and location may instead be completely synthetic, but the result, thanks to many recent technological developments, can still largely follow the ‘rules’ of live-action filmmaking.

Virtual production tends to be used to help visualize complex scenes or scenes that simply cannot be filmed for real. In general, though, virtual production can refer to any technique that allows filmmakers to plan, imagine, or complete some kind of filmic element, typically with the aid of digital tools. Previs, techvis, postvis, motion capture, vr, ar, simul-cams, virtual cameras, and real-time rendering – and combinations of these – are all terms now closely associated with virtual production.

Here’s Cartoon Brew’s primer on just some of the many instances where virtual production has been used of late, and how you can get your hands on some virtual production tools, too.

Pt. I: Virtual production in live-action films

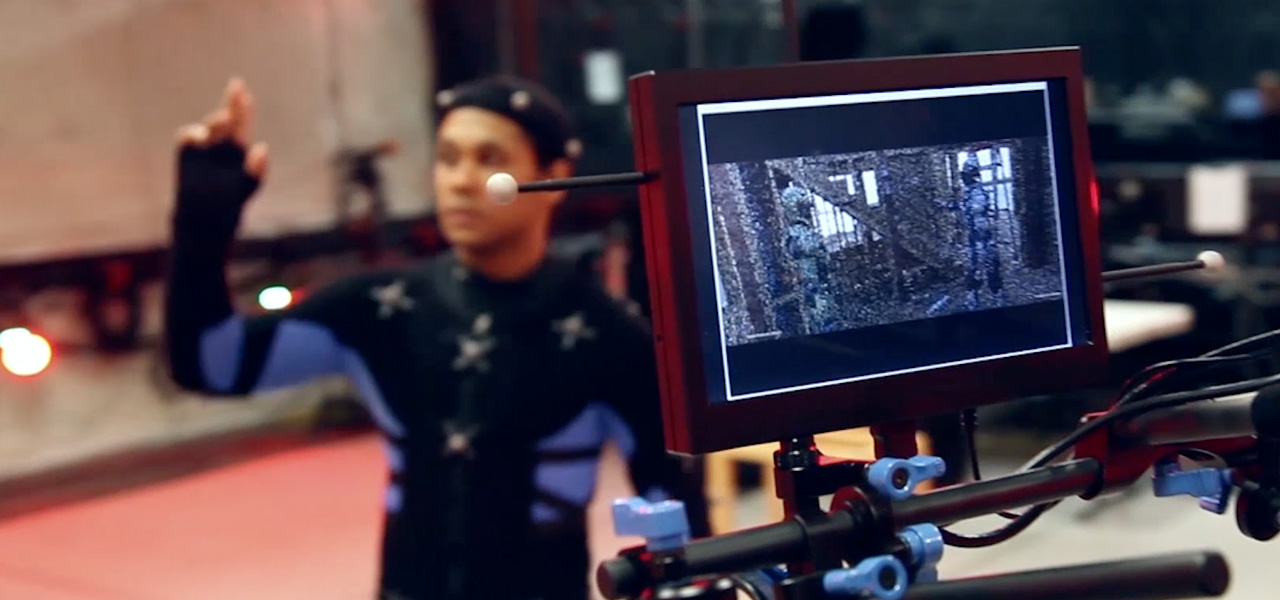

Virtual production tools in live action tend to relate mostly to the capturing of action, often with simul-cams or virtual cameras. These are effectively screens acting as virtual cameras that can be tracked just like motion-captured actors are tracked, so that someone looking at the screen – a director, say – can see an almost-finished composed image of what the final shot may look like. The whole idea is simply to replicate what you might do with a live-action camera, but can’t, because you might be crafting a synthetic world or synthetic characters that cannot be filmed for real.

But it’s not just about being able to visualize these synthetic worlds, it’s also about being able to change them quickly, if necessary. Which is why real-time rendering and game engines have become a big part of virtual production, and why real-time rendering and live-action filmmaking and visual effects have started to merge.
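To make the tracked virtual camera idea a little more concrete, here is a minimal Python sketch of the underlying geometry: a tracked device pose is used to place a point from the synthetic world on the device’s screen. It is an illustration under stated assumptions, not how any particular simul-cam product works. The function names, the yaw-only rotation, the axis convention (y up, camera looking down its local -z axis), and the default 35mm lens on a 36mm-wide sensor are all hypothetical choices made for the example.

```python
import math


def world_to_camera(point, cam_pos, yaw_deg):
    """Express a world-space point in the tracked camera's local frame.

    Assumed convention: y is up, the camera looks down its local -z axis,
    and positive yaw turns the camera to its left (seen from above).
    """
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    yaw = math.radians(yaw_deg)
    # Undo the camera's rotation about the y axis (rotate by -yaw).
    cx = math.cos(yaw) * dx - math.sin(yaw) * dz
    cz = math.sin(yaw) * dx + math.cos(yaw) * dz
    return (cx, dy, cz)


def project_to_screen(point_cam, focal_mm=35.0, sensor_w_mm=36.0,
                      width_px=1920, height_px=1080):
    """Pinhole projection of a camera-space point to pixel coordinates.

    Returns None when the point is behind the camera.
    """
    x, y, z = point_cam
    if z >= 0:
        return None  # at or behind the camera plane, so not visible
    depth = -z
    px_per_mm = width_px / sensor_w_mm
    u = width_px / 2 + focal_mm * (x / depth) * px_per_mm
    v = height_px / 2 - focal_mm * (y / depth) * px_per_mm  # pixel rows grow downward
    return (u, v)


if __name__ == "__main__":
    # A virtual prop five units straight ahead of an unrotated camera
    # lands in the middle of a 1920x1080 screen.
    prop = world_to_camera((0.0, 0.0, -5.0), (0.0, 0.0, 0.0), 0.0)
    print(project_to_screen(prop))  # (960.0, 540.0)
```

Each time the tracking system reports a new pose, re-running the same two steps for the whole scene is what lets the image on the screen follow the operator’s movements. A real-time engine does this, plus lighting and shading, many times per second.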

Several films with hugely fantastical worlds and characters have taken advantage of virtual production techniques to get their images on the screen. Avatar represented a huge leap forward in virtual production (and there are several sequels on the way). This year, Ready Player One relied on virtual production to bring its OASIS world to life. It’s in the OASIS where avatars of human characters interact. That virtual world was ‘filmed’ with motion captured actors using a game engine-powered real-time simul-cam set-up that allowed director Steven Spielberg to ‘find’ the right shots, since the sets the actors occupied had been pre-made in rough form digitally. He could also re-visit scenes afterwards, re-framing and re-taking scenes even after the performance was done.

Welcome to Marwen, coming out next month, also takes advantage of some virtual production techniques to realize its cg figurine scenes (the film’s director, Robert Zemeckis, previously pioneered several virtual production projects with The Polar Express and then with his ImageMovers Digital outfit). And next year, Jon Favreau’s The Lion King is reportedly relying on vr and virtual production techniques to re-imagine the Disney animated film as a completely cg movie.

One of the significant players in virtual production for film is Digital Monarch Media (DMM), which was recently acquired by Unity Technologies, makers of the Unity game engine. It’s no surprise that the companies are heavily intertwined: DMM relies on the game engine for its virtual cinematography offerings, which were used in The Jungle Book, Blade Runner 2049, and Ready Player One. You can see some of their tools in action in the video above.

And many other companies are establishing virtual production workflows, including MPC with its Genesis system, and Ncam, which has an augmented reality on-set previsualization system, something that was relied upon for the largely bluescreen-filmed train heist sequence in Solo: A Star Wars Story. Meanwhile, ILMxLAB recently partnered with Nvidia and Epic Games to use Epic’s Unreal Engine to not only film a Star Wars scene with motion captured actors and a virtual camera, but also to introduce raytracing into the real-time rendered output. The result is the ‘Reflections’ demo below.

Pt. II: Virtual production in TV

If you’ve seen a weather presenter or newsreader interact with broadcast graphics during a television broadcast, then you’ve already seen one of the other major uses of virtual production. Such broadcasts are now incredibly common – they also came to the further attention of many audiences recently when The Weather Channel produced a visualization of a possible storm surge impact from Hurricane Florence. That work in particular relied on Unreal Engine.

Broadcast producers make use of virtual production often because they can control the environment being filmed in – typically a greenscreen studio with fixed lighting and cameras that can be easily tracked. There are several systems that allow for real-time graphics to be ‘overlaid’ over presenters and interact with them (Ncam is also a major player in this area).

Indeed, this work is often referred to as ‘virtual sets,’ and has found significant use in episodic television, too. The ABC series Once Upon a Time regularly showcased its characters amongst fantastical settings. To film those scenes with real actors, visual effects studio Zoic Studios utilized its Zeus virtual production pipeline. It involved filming scenes on a greenscreen stage where digital sets had been pre-built and matched with real-time compositing and on-set camera tracking. Virtual environments were also rendered in real-time during the shoot, and piped to monitors and a ‘simul-cam,’ which was actually a specially designed iPad.
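For readers curious about what real-time compositing on a greenscreen stage involves at the pixel level, here is a minimal Python sketch of a simple difference key. It is not the Zeus pipeline or any broadcast system’s actual method. The function names, the threshold and softness values, and the sample colors are assumptions chosen purely for illustration.

```python
def key_alpha(pixel, threshold=0.15, softness=0.10):
    """Return foreground opacity (1.0 = keep, 0.0 = replace) for an RGB pixel.

    Channels are floats in the range 0.0 to 1.0. A pixel counts as
    'greenscreen' when its green channel clearly exceeds both red and blue.
    """
    r, g, b = pixel
    greenness = g - max(r, b)
    if greenness <= threshold:
        return 1.0  # not green enough: treat as actor or prop
    if greenness >= threshold + softness:
        return 0.0  # strongly green: treat as backdrop
    # In between, fade out gradually to soften the matte edge.
    return 1.0 - (greenness - threshold) / softness


def composite(foreground, background):
    """Blend one filmed pixel over one rendered virtual-set pixel."""
    alpha = key_alpha(foreground)
    return tuple(alpha * f + (1.0 - alpha) * b
                 for f, b in zip(foreground, background))


if __name__ == "__main__":
    virtual_set = (0.2, 0.3, 0.8)   # a pixel from the rendered environment
    backdrop = (0.0, 1.0, 0.0)      # pure greenscreen
    skin = (0.8, 0.6, 0.5)          # a pixel on the actor
    print(composite(backdrop, virtual_set))  # (0.2, 0.3, 0.8)
    print(composite(skin, virtual_set))      # (0.8, 0.6, 0.5)
```

Production keyers are far more sophisticated (they handle green spill, hair detail, and uneven lighting), but the basic idea of swapping backdrop pixels for rendered ones, frame by frame, is the same.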

Pt. III: Virtual production in previs

Previs is perhaps the ultimate form of virtual production. It’s all about imagining action, camera angles, and camera movement before anything is filmed, or before anything is tackled as final visual effects or animation.

More and more, previs studios tend to build digital sets to match the dimensions of the real ones, with the previsualized action inside those sets able to be played back during filming or even overlaid as a real-time composite to the physical set. The types of cameras and lenses, lighting, mood, and look, can all be imagined in previs ‘virtually,’ first. Previs studios, too, have moved into using virtual production tools such as motion capture, simul-cam set-ups, and real-time game engines to deliver their work.
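One small piece of the lens-matching step can be written down directly: the horizontal field of view that a virtual camera needs in order to frame a digital set the way a given real lens would. The sketch below uses the standard pinhole relationship between focal length and sensor width. The function name and the 36mm default sensor width are assumptions for the example, and real lenses add distortion that this ignores.

```python
import math


def horizontal_fov_degrees(focal_length_mm, sensor_width_mm=36.0):
    """Horizontal field of view of an ideal pinhole lens, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


if __name__ == "__main__":
    # Compare a wide, a normal, and a long lens on the same assumed sensor.
    for focal in (24.0, 50.0, 85.0):
        print(focal, round(horizontal_fov_degrees(focal), 1))
```

Setting the virtual camera’s field of view from the planned lens in this way is one reason previs framing can carry over to the physical shoot.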

Pt. IV: The future of virtual production, and your project

Virtual production is clearly something the big films and the big television shows are using. But is it something that smaller, independent filmmakers can take advantage of?

Blur Studio director Kevin Margo is one of a number of filmmakers who present a useful working example. He recently released his fully-cg short Construct, which utilized virtual production techniques to tell a story of repressed robot construction workers. The short was Margo’s own exploration into virtual production, as well as GPU rendering options, including Chaos Group’s V-Ray. Many of the filming techniques used had also been regularly relied upon for Margo’s game cinematics work at Blur. The video below showcases some of his virtual camera approaches.

The makers of the two major game engines, Unreal and Unity, are certainly pushing virtual production. It’s their engines that are the backbone of many real-time rendering and virtual set production environments. It’s important to note for independent filmmakers that these engines are generally available for free, at least in terms of getting started.

So, where can you look for further information on getting into virtual production? Here are a few starting points:

- Unreal has just launched a dedicated hub for virtual production

- Unity has a dedicated page on its website for filmmaking aspects of its game engine

- Dutch startup Deepspace is concentrating on delivering virtual production services mostly for previs

- Markerless motion capture suit maker Rokoko has a virtual production kit available that incorporates its Smartsuit Pro, a SteamVR tracking system, and other hardware and software to help realize scenes virtually

- The Institute of Animation at Germany’s Filmakademie Baden-Württemberg has been working on virtual production tools for a few years, and has released an open-source tablet-based on-set editing application called VPET