See The Results Of This Animator’s Homemade Motion Capture System Using iPhone X

The Apple iPhone X is turning out to be a much more powerful tool for animation artists than anyone could have imagined.

Thanks to the TrueDepth camera system on the front of the phone, as well as Apple’s ARKit API, which tracks 52 different facial motion groups, animators can turn the phone into an ad hoc performance capture system.

A couple of months back, we shared a facial capture test made by a Taiwanese artist. Now, Cory Strassburger of the L.A.-based digital studio Kite & Lightning has taken the concept to the next level with a series of tests, posted on YouTube, that combine the iPhone X, a custom app he built for the phone, a homemade helmet rig, and the Xsens MVN Link performance capture suit.

He explained in the video notes that he wanted to see whether the iPhone X “can be used for cheap & fast facial motion capture for our vr game which is over run by wild and crazy immortal babies who all want to express themselves.”

Strassburger’s first test is a rough facial capture pass using a work-in-progress character rig from his upcoming VR project Bebylon: Battle Royale.

In a second test, Strassburger added eye tracking, head motion, and improved blendshapes, and also imported the recorded facial animation data from the iPhone X into Maya. Asked by a YouTube commenter how he extracted the facial data from the phone, Strassburger wrote:

> Getting the data is pretty easy. I used Unity, which has hooks into Apple’s ARKit and outputs 51 channels of blendshape data at 60fps. I imported a WIP character head from our game (with the right blendshapes that ARKit is looking for), hooked the 51 channels of data to it (so I can visualize the character while capturing), then wrote a way to record the data to a text file locally on the iPhone. (This could easily be streamed over USB, but then you lose the mobility.) From here, copy the file from the phone and run it through a converter I wrote to parse the text file into a Maya .anim format, which imports nicely into Maya and drives the same blendshapes as Unity did. No need for a Maya plug-in!
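
Strassburger did not publish his recording format or his converter, so the following is only a minimal Python sketch of the parsing step he describes, built on stated assumptions. The recording is assumed to be a hypothetical CSV with one header row of ARKit-style blendshape names and one row of weights per frame, captured at 60fps. The output is a simplified per-channel keyframe listing on a hypothetical `blendShape1` node. It is not Maya’s actual .anim syntax, which requires additional header and per-curve fields that this sketch does not attempt.

```python
import csv
import io
from typing import Dict, List, Tuple

# ASSUMPTION: hypothetical recording layout, not Strassburger's actual file.
# Header row = blendshape names; each following row = one frame of weights (0.0-1.0).
SAMPLE = """jawOpen,eyeBlinkLeft,eyeBlinkRight
0.10,0.00,0.00
0.25,0.80,0.78
0.40,1.00,0.97
"""

CAPTURE_FPS = 60  # capture rate quoted in the comment above

Curves = Dict[str, List[Tuple[float, float]]]


def parse_recording(text: str) -> Curves:
    """Split per-frame rows into one (time_in_seconds, weight) key list per channel."""
    reader = csv.reader(io.StringIO(text))
    names = next(reader)
    curves: Curves = {name: [] for name in names}
    rows = [row for row in reader if row]  # drop blank lines so they don't consume a frame index
    for frame_index, row in enumerate(rows):
        t = frame_index / CAPTURE_FPS
        for name, value in zip(names, row):
            curves[name].append((t, float(value)))
    return curves


def to_keyframe_text(curves: Curves, node: str = "blendShape1") -> str:
    """Emit one 'node.channel time weight' line per key.

    HYPOTHETICAL placeholder layout: a real converter would write Maya's .anim syntax instead.
    """
    lines = []
    for name, keys in curves.items():
        for t, weight in keys:
            lines.append(f"{node}.{name} {t:.4f} {weight:.4f}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_keyframe_text(parse_recording(SAMPLE)))
```

Running it prints one line per channel per frame, for example `blendShape1.jawOpen 0.0167 0.2500` for the second frame. Keeping one curve per channel mirrors how each blendshape weight is keyed as a separate attribute in Maya, so a fuller converter would only need to swap the placeholder writer for the real .anim layout.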

For more on how Strassburger built the DIY helmet rig, see this piece at UploadVR.

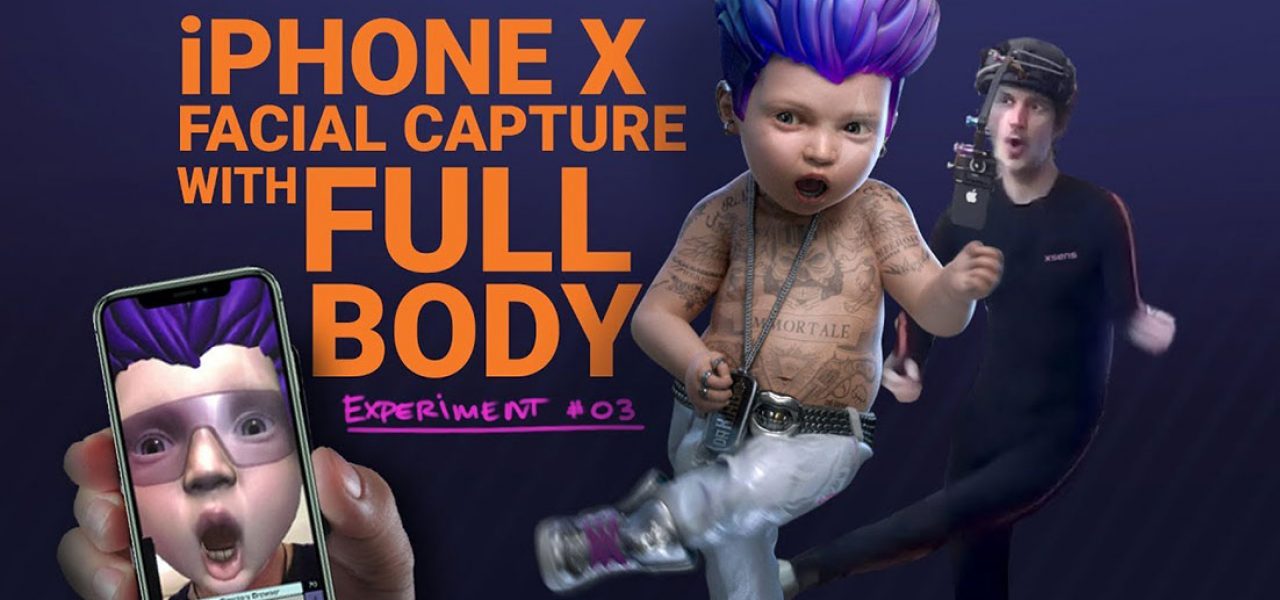

In his most recent test, Strassburger combines the facial capture with full-body capture using the Xsens bodysuit.

The quality of Strassburger’s demos also suggests tantalizing possibilities beyond motion capture, especially in augmented reality. For instance, real-time video chats in which a participant appears as a convincing animated character don’t seem too far off.

(h/t, Daniel Colon)
