Real-Time Animation Software Adobe Character Animator Adds New Features In Public Beta

Character Animator, an application included in Adobe’s Creative Cloud suite, is getting an update. A host of new features coming to the software can now be tried out in public beta.

Since its launch five years ago, Character Animator has been increasingly used by studios looking to harness its real-time animation capabilities. So far, the software has let users control animated characters in real time with a mouse or keystrokes, as well as through their own facial expressions and dialogue. It has been used to speed up traditional pipelines and to stage live animation (remember that skit with Homer Simpson?).

The latest version is more accurate and versatile than before. Here’s a list of new and improved features, as described by Adobe:

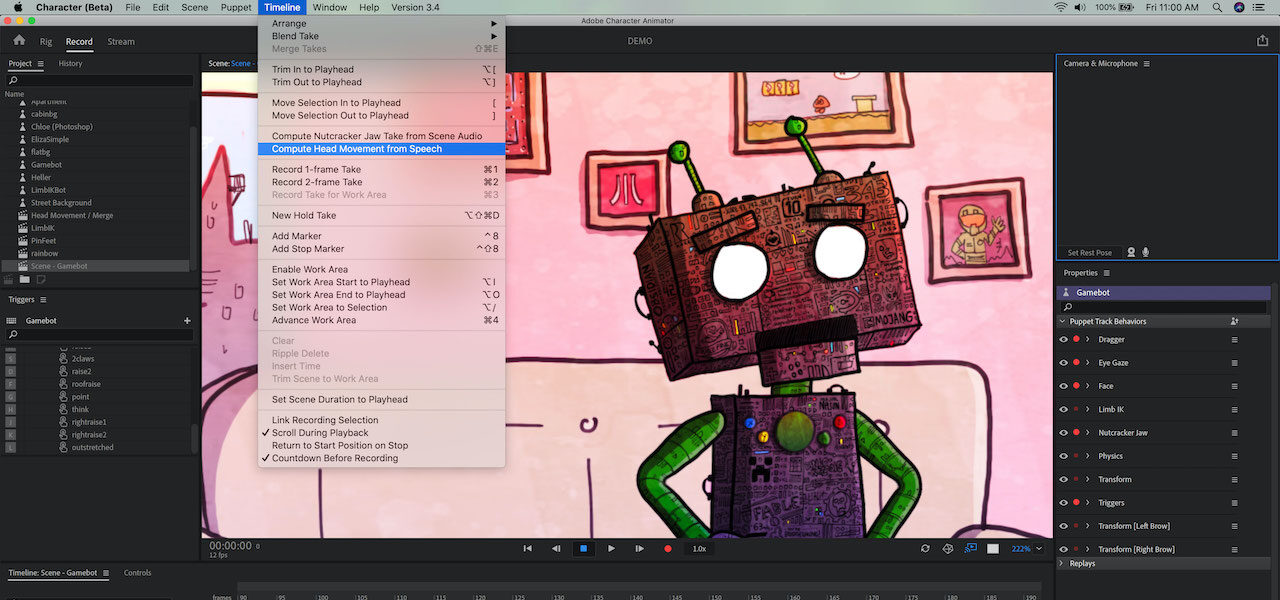

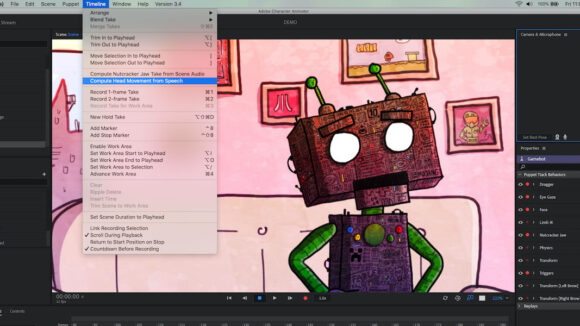

- Speech-Aware Animation uses the power of Adobe Sensei to automatically generate animation from recorded speech and includes head and eyebrow movements corresponding to a voice recording. Speech-Aware Animation was developed by Adobe Research and previewed at Adobe MAX in 2019 as Project Sweet Talk. The feature requires Windows 10 or macOS 10.15.

- Limb IK (Inverse Kinematics) gives puppets responsive, natural arm and leg motion for activities like running, jumping, and dancing across a scene. Limb IK controls the bend directions and stretching of legs, as well as arms. For example, pin a hand in place while moving the rest of the body or make a character’s feet stick to the ground as it squats in a more realistic way.

- Timeline organization tools include the ability to filter the Timeline to focus on individual puppets, scenes, audio, or keyframes. Takes can be color-coded, hidden, or isolated, making it faster and easier to work with any part of your scene. Toggle the “Shy button” to hide or show individual rows in the Timeline.

- Lip Sync, powered by Adobe Sensei, has improved algorithms and machine learning, which now deliver more accurate mouth movement for speaking parts.

- Merge Takes allows users to combine multiple Lip Sync or Trigger takes into a single row, which helps to consolidate takes and save vertical space on the Timeline.

- Pin Feet has a new Pin Feet When Standing option. This allows the user to keep their character’s feet grounded when not walking.

- Set Rest Pose now animates smoothly back to the default position when you click to recalibrate, so you can use it during a live performance without causing your character to jump abruptly, for example, if you inadvertently move your face out of the frame.

Adobe is pitching Character Animator as a time-saving tool for animation producers as they continue to work through the disruptions of the pandemic. It offered the beta features to Nickelodeon, which used them to produce the half-hour special The Loud House & The Casagrandes Hangin’ At Home in one week in May.

“To help make this happen remotely,” says Rob Kohr, director of animation and vfx, creative and design at Nickelodeon, “we used the Character Animator beta lip-sync tool, which saved our animators a ton of time by providing a much more natural lip-sync and allowing the team to work more collaboratively.”

Other productions to have used the features already include ATTN’s Your Daily Horoscope, which streams on Quibi, and CBS All Access’s Tooning Out the News. Earlier this year, the software won a technical Emmy “as a pioneering system for live performance-based animation using facial recognition.”

Character Animator is available for download via the Creative Cloud desktop application, including both the release version and the public beta.
