How One Animator Is Making His Own CG Series With Unreal Engine

Back in 2015, Epic Games encouraged artists to use its Unreal Engine for animation projects. The company showcased what was possible in a short called A Boy and His Kite, and many viewers were surprised both by the visual quality a game engine could achieve and by the workflow opportunities that real-time rendering could provide.

New Zealand animator Peter Monga was one of those artists inspired by the Epic Games short. What’s more, he saw a way to make an animated preschool series by himself and without the need for a renderfarm.

That show idea, still in development, became Morgan Lives in a Rocket House, which Monga is making with Unreal Engine 4. Cartoon Brew asked the animator about working solo with the game engine and the lessons – good and bad – he’s learned so far in devising an entire series and a planned vr version of the show.

Searching for the right show idea

Monga had a desire to create his own television show at the end of 2014. At the time, he had had experience on several Nickelodeon cg animated series while working at Oktobor Animation in Auckland, and then as an animation director on shows including Penguins of Madagascar, Kung Fu Panda: Legends of Awesomeness, and Robot and Monster.

Oktobor Animation closed in 2013, and Monga moved on to freelance animation projects, including several commercials. This gave him time to pursue the idea of producing his own show, something he planned to do entirely alone or with a very small team. With a love of old preschool stop-motion animated shows like The Magic Roundabout and Pingu, Monga looked into how the settings and ideas in those could be accomplished with modern tools.

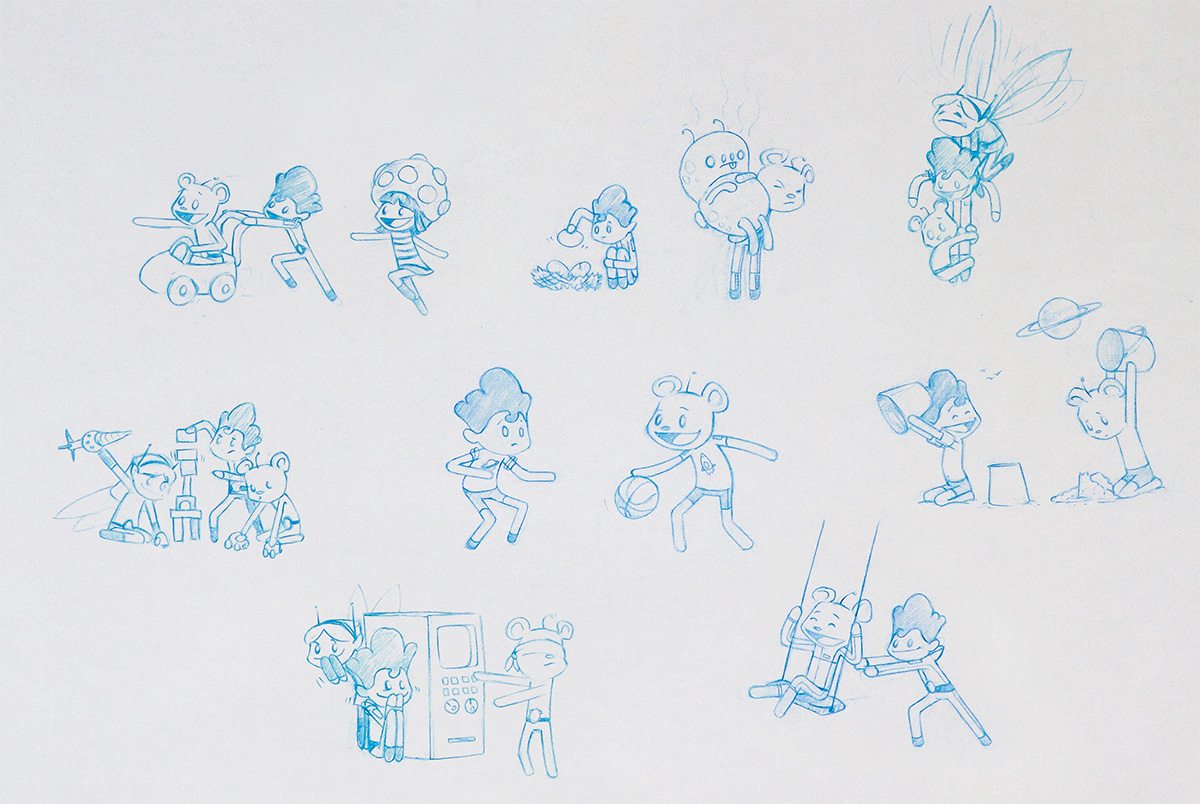

“I came up with a set of rules out of that research,” Monga told Cartoon Brew. “Characters should be easy to animate. This is why my characters in Morgan ended up with no hands, feet, or pupils. My show was also stop-motion inspired, so another rule was: only animate at 12 fps.”

Added Monga: “It would be narrated, which meant no lipsync and only one voice actor would be needed. There would be one self-contained set or world where all the action takes place, that is, no adventuring to distant lands. There’s also a limited cast of characters and no new episode specific characters. There’s a limited number of new props per episode, although I didn’t really stick to this rule. And finally I tried to keep character seconds low, i.e., fewer characters on screen at once.”

Morgan Lives in a Rocket House would ultimately be about a child, Morgan, marooned on an alien planet light years from Earth. It’s here that Morgan befriends Elliot the Space Bear and Synth the Space Fairy. The setting and characters grew out of brainstorming sessions built around what Monga describes as the loose themes of “space and the future, bottom-of-the-garden, prehistoric, fantasy, animals, and toys.”

Choosing Unreal Engine

Monga had experimented with real-time rendering while at Oktobor, but for Morgan he had initially planned to use Maya’s Viewport 2.0 (which would provide real-time playback and remove the need for an offline renderer). Then he saw Epic’s A Boy and His Kite and discovered that Unreal Engine 4 would be available for free. “I felt the visuals in that short were far beyond what I was originally envisaging for my project, so I jumped straight in,” he said.

Although Morgan is rendered in real time in Unreal Engine 4, the project does rely on other software. Maya is still used for modeling and animation, with Photoshop used for texturing. Monga crafts his animatics in After Effects, which he also relies on for some post effects such as camera flashes. Editing is handled in Premiere. In terms of hardware, Monga makes the show on a machine with an Intel Core i7-6700K processor and a GeForce GTX 1070 graphics card, and he also uses a Wacom Intuos4 tablet.

Unreal’s role is to let Monga skip the separate lighting, rendering, and compositing stages of the traditional animation pipeline. “What I output from Unreal is essentially what ends up on screen,” said Monga. “All color grading and post processing can be done in the engine. Higher grade effects that I originally thought would be too cost prohibitive are easily achieved – things like particle effects, depth-of-field, global illumination, high quality ambient occlusion, reflections, and refraction.”

“Essentially,” added Monga, “Unreal allows me to achieve a high-quality final image with much less time than a usual pipeline. Sure, I could probably get a higher fidelity image from offline rendering, but Unreal can produce images that are more than good enough for what I am looking for. And not having to wait for renders not only saves time, but also equipment and power usage.”

Lessons learned

So far, Monga has created a two-minute trailer and three 5-minute episodes of the show. He says animation takes him around four or five weeks for a 5-minute episode. Once animation on an episode is done, it then takes just three days to have a final rendered show out of Unreal. “The episodes are about 80 shots each,” said Monga. “There’s no way I’d be able to light, render, and comp 80 shots in three days using my old pipeline.”

As Monga has made each episode, he’s taken away some valuable lessons in using Unreal. The first is that several steps still need to be done in pre-production to ensure a smooth workflow. “You need to transfer your assets from whatever animation package you are using into Unreal,” said Monga, “and set up shaders for each character or prop, which I suppose isn’t that different from having to create shaders for a normal renderer.”

Monga also notes that he needs to pre-light the environment and bake out the lighting, and this can take time (the small set in Morgan takes around 30 minutes to bake). But once it’s baked, it’s done, and he can re-use the environment for every other shot in the show. If he needs to alter the lighting for a shot, such as adding an extra fill light just for the characters, Monga uses a dynamic light, which does not require baking.

It’s in actual animation that Monga says he encountered the most challenges in making Morgan. “Importing the animation file, assigning it to the correct asset, and setting up Sequencer can be a bit tedious. Sequencer is Unreal’s non-linear animation system that handles animation files similar to how Final Cut or Premiere handles video clips. It’s not too bad, but it is menial work rather than creative. Maybe it’s possible to write a plugin to automate the process a bit more, but it would be beyond my coding abilities.”

The state of play for Morgan

In addition to the trailer and episodes created so far, Monga has also produced a four-minute vr episode. He saw this as feasible because the cg assets were already in Unreal Engine, and the process turned out to be even easier than he had imagined.

“Because vr is very computationally intensive, I thought I would need to manually down-res all my textures and models,” Monga said. “But Unreal has a built-in LOD (level of detail) system which allows you to reduce the polycount and texture resolution automatically. So I didn’t have to alter my models at all, I just set up the LOD system, which was pretty easy.”

The vr episode does not have any cuts, a structure that Monga initially found difficult to deal with. “I had to find ways to get the viewer to look where I wanted, rather than just being able to cut to what I want them to look at. I think I managed to pull it off using contrasting movement, characters’ facing direction, characters pointing, and positional sound.”

The episode was blocked out in Unreal’s VR editor, a mode that enables a user to work in the editor in vr. Monga then drew a playbook-style game plan of where he wanted the characters to be, on which the final animation layout was based. Again, animation proved tricky. “VR runs at 90 fps so I couldn’t animate at 12 fps because that looked much too stuttery,” said Monga. “The illusion created by the persistence of vision breaks down in vr. So I animated at 24 fps then did an extra polish pass at 48 fps to make sure it looked smooth at high frame rates. In retrospect I should have animated at 30 fps, [which divides evenly into 90,] so the frames matched up correctly.”
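The arithmetic behind Monga’s point about 30 fps can be sketched in a few lines. This is a simplified model, not how Unreal actually schedules animation: it assumes the headset refreshes at 90 Hz and that each refresh simply shows the most recent animation pose (a sample-and-hold assumption). The function names `refreshes_per_frame` and `hold_pattern` are hypothetical, written only for illustration.

```python
from fractions import Fraction

DISPLAY_HZ = 90  # headset refresh rate Monga cites for vr


def refreshes_per_frame(anim_fps: int, display_hz: int = DISPLAY_HZ) -> Fraction:
    """Exact number of display refreshes per animation frame (may be fractional)."""
    return Fraction(display_hz, anim_fps)


def hold_pattern(anim_fps: int, display_hz: int = DISPLAY_HZ) -> list[int]:
    """Count how many refreshes each animation frame stays on screen over one second.

    Simplifying assumption: at refresh r the display shows animation frame
    floor(r * anim_fps / display_hz), i.e. the latest pose is held until the next one.
    """
    counts = [0] * anim_fps
    for r in range(display_hz):
        counts[(r * anim_fps) // display_hz] += 1
    return counts


if __name__ == "__main__":
    for fps in (12, 24, 30, 48):
        pattern = hold_pattern(fps)
        print(f"{fps} fps: {refreshes_per_frame(fps)} refreshes per frame, "
              f"holds {sorted(set(pattern))}")
```

Under this model, 30 fps gives every pose exactly 3 refreshes, while 24 fps (15/4 refreshes per frame) alternates between 3- and 4-refresh holds, and 12 fps alternates between 7 and 8. That uneven cadence is one way to see why a rate that divides evenly into 90 lines the frames up.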

Monga hopes to soon release the vr episode as an experience on Steam and the Oculus Store, as well as a rendered stereo 360-degree video on YouTube.

For now, Morgan’s more traditional episodes are being posted progressively on YouTube. Monga ultimately plans to partner with a studio to build a full season of the show. “I think my main goal with making a show was really just to see if I could do it,” he said. “I wanted to see if I could put together a pipeline that allowed me to create a high-quality product with a small-scale team. I think the project is already a success in that respect.”