Could These Be The Next High-Tech Tools That Animators Use Daily?

The peer-reviewed technical papers to be presented at this year’s SIGGRAPH conference in Vancouver in August have been announced, and several may offer a glimpse of the tools animators could soon be using in their work.

Indeed, technologies shown at SIGGRAPH (which stands for the Special Interest Group on Computer Graphics and Interactive Techniques) often go on to be incorporated into real-world tools, or help enable further research.

This year, among other technical papers on rendering, virtual reality, and computer graphics simulations, there are a few stand-outs in animation. Here’s Cartoon Brew’s preview.

Synthesizing the cartoony feel

The first paper to look out for involves a method for synthesizing hand-colored cartoon animation. The authors, who hail from Czech Technical University in Prague and Adobe Research, call this ‘ToonSynth.’ They’ve developed a tool to preserve the stylized motion of hand-drawn animation without artists having to draw every frame.

From the abstract for the paper:

In our framework, the artist first stylizes a limited set of known source skeletal animations from which we extract a style-aware puppet that encodes the appearance and motion characteristics of the artwork. Given a new target skeletal motion, our method automatically transfers the style from the source examples to create a hand-colored target animation. Compared to previous work, our technique is the first to preserve both the detailed visual appearance and stylized motion of the original hand-drawn content.

The authors suggest ToonSynth has practical applications in traditional animation production and in content creation for games. More details are in the paper, ‘ToonSynth: Example-Based Synthesis of Hand-Colored Cartoon Animations.’

Aggregating drawing strokes

In the paper ‘StrokeAggregator: Consolidating Raw Sketches into Artist-Intended Curve Drawings,’ the authors have developed a tool that takes an artist’s raw strokes – i.e. rough pencil drawings – and turns them into a cleaned-up drawing designed to look like what the artist intended. In other words, it’s automated clean-up for 2D animators. The idea and execution are best described in the video, below.

Motion capturing a person wearing clothing, with no markers

The next paper, ‘MonoPerfCap: Human Performance Capture from Monocular Video,’ presents a unique approach to motion capture. Here, the researchers have been able to reproduce the motion of a person wearing clothing by filming them with a single camera and no markers, and they’ve been able to replicate aspects of the deforming clothing and body parts in doing so.

Their paper abstract states:

Human performance capture is a challenging problem due to the large range of articulation, potentially fast motion, and considerable non-rigid deformations, even from multi-view data. Reconstruction from monocular video alone is drastically more challenging, since strong occlusions and the inherent depth ambiguity lead to a highly ill-posed reconstruction problem. We tackle these challenges by a novel approach that employs sparse 2D and 3D human pose detections from a convolutional neural network using a batch-based pose estimation strategy.

Read more about ‘MonoPerfCap’ here.

Natural animation via neural networks

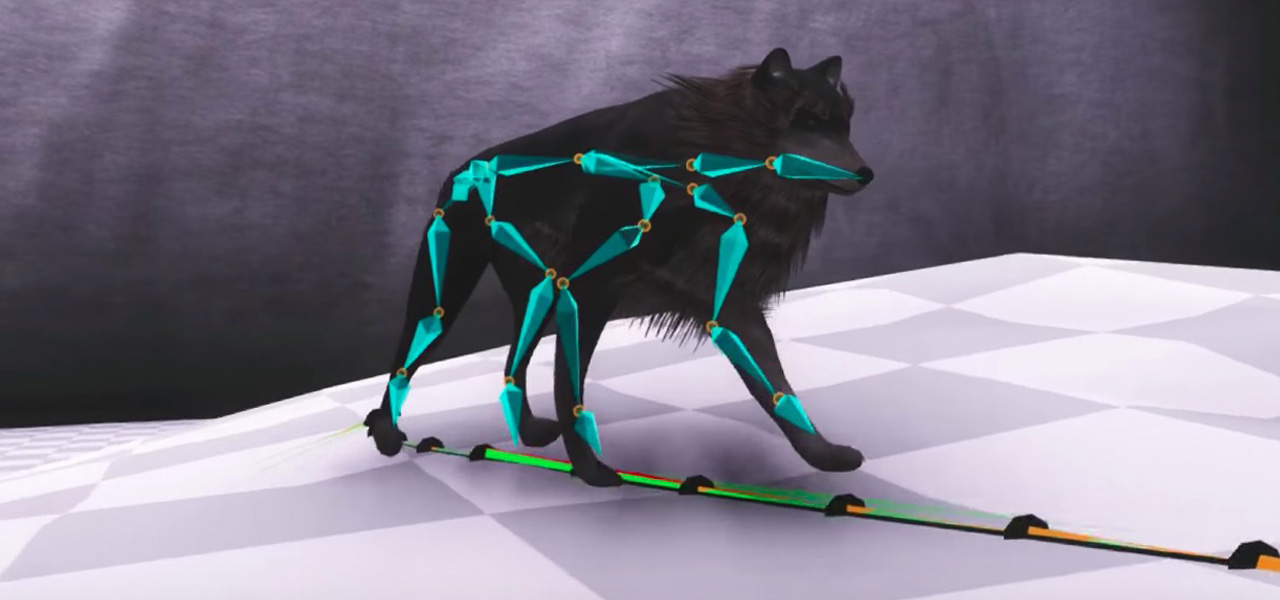

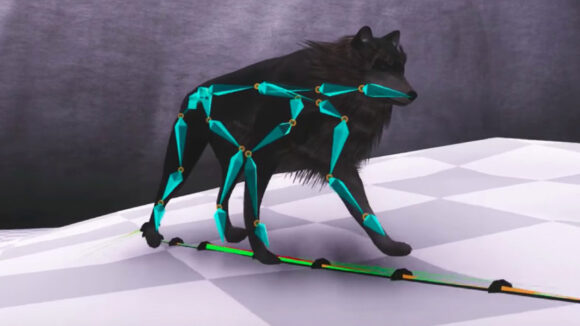

Neural networks are a key part of the MonoPerfCap paper, above, and they also feature in a recent run of technical papers that use artificial intelligence and deep learning to aid in simulations, animation, and processing. This next paper is all about a new method for synthesizing the animation of quadrupeds based on neural networks. The authors have ‘trained’ their system on unstructured motion capture data, meaning they haven’t had to describe it in any particular way – the system ‘learns’ the motion.

Here’s some more detail from the abstract to the paper, titled ‘Mode-Adaptive Neural Networks for Quadruped Motion Control’:

The system can be used for creating natural animations in games and films, and is the first of such systematic approaches whose quality could be of practical use. It is implemented in the Unity 3D engine and TensorFlow.

All of the technical papers are listed at SIGGRAPH’s website. Others to look out for are ‘An Empirical Rig for Jaw Animation’ from Disney Research, which proposes a new jaw rig for animated characters, and ‘Fast and Deep Deformation Approximations,’ which includes contributions from DreamWorks Animation and uses deep learning methods to help film-quality character rigs run on consumer-level devices.

And if you’re attending the conference in August, one of the most fun sessions is the Technical Papers Fast Forward, where authors have 30 seconds each to try to entice attendees to come to their presentation later in the week.
