How ‘Welcome to Marwen’ Mixed Animation And Live-Action To Turn Steve Carell Into a Doll

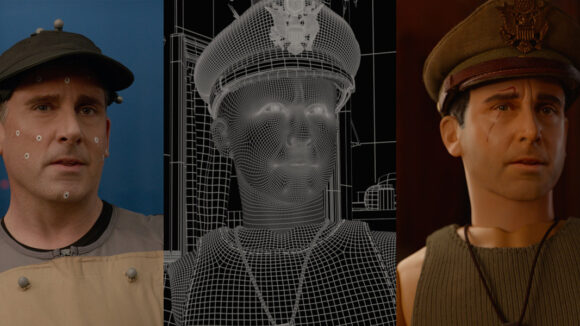

Welcome to Marwen, directed by Robert Zemeckis, tells the story of assault victim Mark Hogancamp (Steve Carell), who copes with his traumatic experience by building a miniature World War II village, complete with miniature dolls that represent live-action characters in the film.

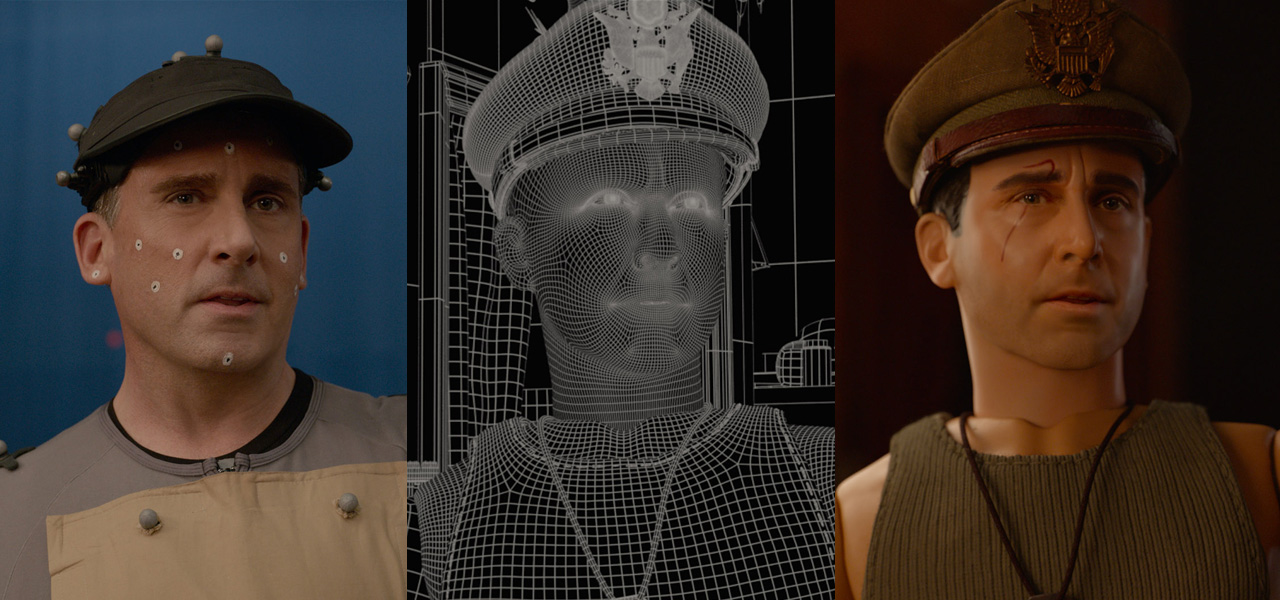

Although those dolls come to life as cg figurines, they were not completely digital creations. Given his previous experience with motion capture films, Zemeckis was adamant that the dolls – and particularly their faces – be realized in a more lifelike manner, and in a way that avoided the lengthy process of translating facial capture into a final performance.

Called upon to find a solution was production visual effects supervisor Kevin Baillie, who worked with Zemeckis to film the actors’ real faces and then transpose them onto the dolls using a virtual production workflow. Baillie’s studio Atomic Fiction (now part of Method Studios) carried out the lion’s share of the cg work, with Framestore also contributing.

First, a test

Baillie told Cartoon Brew that, in the early stages of pre-production, he and Zemeckis considered several ways to achieve the dolls and their faces. One was to shoot the actors in live action, augment them with digital doll-like joints, and then use compositing methods to give them a final ‘heroic’ action figure physique. Another was full motion capture – body and face – for a fully cg doll model. To help them decide, they planned and staged a test shoot with Steve Carell, run like a screen test.

“It was filmed about a year before we actually started principal photography on the film, with Steve Carell in costume under lighting as if he was doing a makeup test for a live-action movie,” said Baillie. “At the time, we thought that the most likely methodology that we were going to use was going to be digitally giving him a heroic doll physique, using compositing-based methods and augmenting him with digital, 3d-tracked-in doll joints.”

The results from that initial test were disastrous.

“The first time we had a work in progress of that test for Bob to look at, we all agreed that it looked absolutely horrific,” recalled Baillie. “It looked like Steve Carell in a very high-end Halloween costume. There’s just something about the way that humans move and how skin stretches between joints that just didn’t look like a doll at all, even if his joints themselves looked like doll joints.”

The team then turned to full motion capture, using the live-action test as its input. Artists hand-rotomated and hand-tracked about 2,000 frames’ worth of Carell’s performance, as well as that of Leslie Zemeckis (who plays Suzette in the film), as if the actors’ bodies had been motion captured. The faces were hand-animated, as if they had been recorded with head-cam motion capture.

Again, the results were considered sub-optimal. “Bob immediately responded to it as if he was seeing an animation take from his mocap film, A Christmas Carol,” said Baillie. “I had worked with him on that film back in 2007 and 2008. I could just see him just going back into the same place of like, ‘Oh my gosh, we’re going to have to do so much work to get the actors’ faces to really read the soul of the actor.’ There’s something in that motion capture-driven process where there’s a little bit of soul of the actor that’s lost. He just said, ‘I can’t suffer that again on this movie. If this is all we can do, I just can’t do it like this.’”

Baillie went back to the drawing board, but the filmmakers soon had what he calls an ‘a-ha moment.’ They realized that they now had, in effect, a motion capture version of Carell’s doll (called ‘Hoggie’) in terms of body motion, and that the test had also produced live-action footage of Carell that matched that body motion exactly, in both movement and lighting.

“So we thought, ‘Why don’t we just use little pieces of Steve Carell? Let’s use his eyes and his mouth from the footage, combine it with this perfectly believable cg doll body and see if we can get the soul of Steve Carell to show through in his Hoggie character that way.’ It was, however, a long road from that a-ha moment to actually presenting a final proof of concept.”

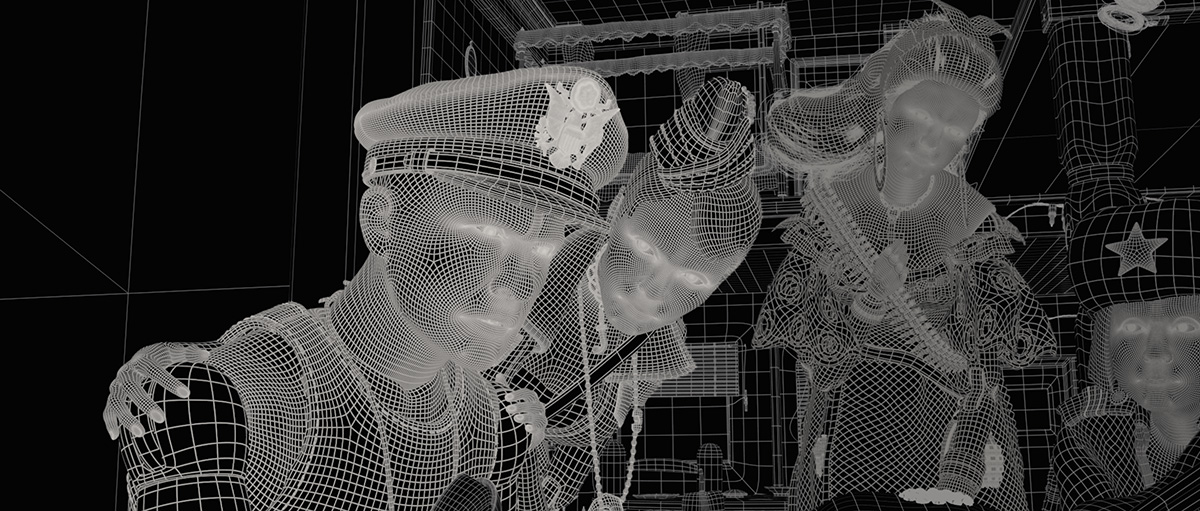

To get there, vfx artists from Atomic Fiction had to work out ways to re-project the real footage of Carell’s face onto a cg proxy face and, in particular, to make the footage of the eyes and mouth line up properly with the cg body and head. The result then had to look like a plastic doll rather than carry a human skin texture. “Once we got there,” said Baillie, “we had developed a process that not only produced a test to help get the movie greenlit, but also served as a baseline for establishing how we would do all 17 doll characters that ended up in the movie.”

Marwen methodology

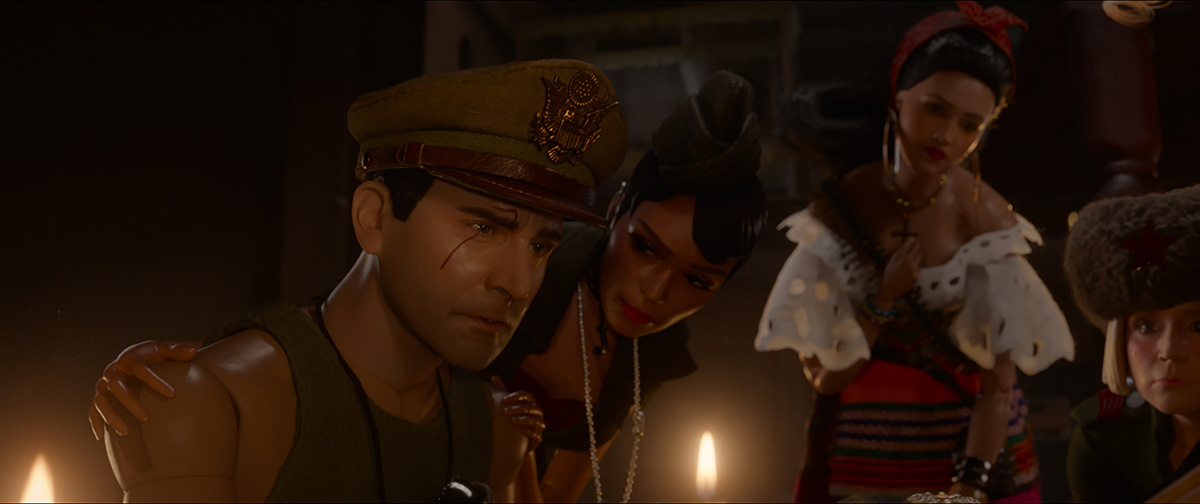

Attention then turned to crafting final shots for the film, a process that drew directly on the benefits of virtual production. The actors were motion captured in a dedicated capture volume set up in Vancouver by Profile Studios. At the same time, they were lit as if they were being filmed for a real shot and were also recorded with cinema cameras (the same Arri Alexas used for the live-action portions of the film).

“At the end of the day,” said Baillie, “from the motion capture stage, we had motion data for the bodies of the actors, we had information for where the cameras were and what they were seeing, and we had footage of the actors’ faces that was ‘beauty lit’ that was filmed from the exact camera angles that the final shots from the movie were going to be filmed on.”

To light the motion capture stage properly, and match it to what would be done in live action, cinematographer C. Kim Miles pre-lit every scene in the film before any motion capture was shot. This was made possible by a real-time cg asset of the Marwen town in which the dolls exist, based on the actual miniature of the town built by production designer Stefan Dechant and his team, along with Dave Asling of miniatures house Creation Consultants. Using an iPad app and a real-time setup engineered in Unreal Engine, Miles could interactively light the town, adjust sun positions, and make other virtual cinematography decisions.

“That enabled us,” said Baillie, “to know that when we showed up on the motion capture stage, C. Kim Miles could light the characters exactly as he planned. He basically had a blueprint for how the lighting was going to be set up. So that enabled him and his team to work quicker on the mocap stage, because they already knew what they were going to be doing before they got there that day. And what it also allowed us to do was show Kim and Bob in real-time with moving doll characters, in a world that is representative of what the final Marwen town was going to look like, how his lighting actually looks in the town, rather than just guessing at it in a gray void of a mocap stage.”

Armed with that live-action footage and the motion-captured actor bodies, the visual effects team could then get to work on re-projecting the real faces onto their cg doll counterparts. Each actor had previously been laser scanned, and the resulting scans were used to help sculpt digital doll heads. These scans also informed the physical dolls used on set. Animators hand-keyframed facial performances onto the cg doll characters using the live action as reference, while the body animation involved coming up with doll-like movements.

Vfx artists also carried out precise object and body tracks of the live-action footage, and the footage of each actor’s real face and head was projected onto the cg doll’s head. A proprietary process was then used to ‘plasticize’ the head, essentially de-aging and smoothing the face so that it looked like a figurine with an appropriate plastic sheen. “The end result,” said Baillie, “was that the facial performances have every nuance of the actors’ real performances, but they look perfectly integrated, as if they are part of the rest of this plastic doll.”

VFX that works, and on a budget

One reason Baillie and his team chose that particular methodology for Welcome to Marwen’s dolls was the film’s relatively restrained budget. “If we had had $150 million to make this movie,” said the visual effects supervisor, “we would’ve been very tempted just to dump tens of thousands of man hours into animating faces that ultimately wouldn’t have looked as good as this methodology that we came up with. But we only had a fraction of that budget.”

Instead, combining live-action footage with a virtual production approach let the team meet both the budget and the goal of authenticity. “I think Welcome to Marwen and the process we used to make it is a poster child for what virtual production can be and how it can help to involve every department on the physical production of the film,” said Baillie. “It was very, very cool to see how well it all worked.”