SIGGRAPH Previews 2012 Emerging Technologies

Chicago, IL — SIGGRAPH 2012 Emerging Technologies provides attendees an exclusive hands-on opportunity to interact with the newest developments in multiple fields including 3D displays, robotics, interactive input devices, and more.

SIGGRAPH 2012, August 5-9 at the Los Angeles Convention Center, welcomes 26 of the latest innovations, selected by a jury of industry experts from 99 submissions. Countries represented include Japan, the U.S., Hungary, Canada, Singapore, South Korea, and China.

“Emerging Technologies demos allow attendees to directly experience novel systems. They provide a forum for presenters to showcase technology innovations and new interaction paradigms,” said Preston Smith, SIGGRAPH 2012 Emerging Technologies Chair from the Laureate Institute for Brain Research. “Each one has an interactive component that is best experienced in person and involves either new technology or a novel use of existing technology. In many cases, these technologies are right out of a research lab and won’t be seen by the general public for another three to five years.”

Featured highlights from SIGGRAPH 2012 Emerging Technologies:

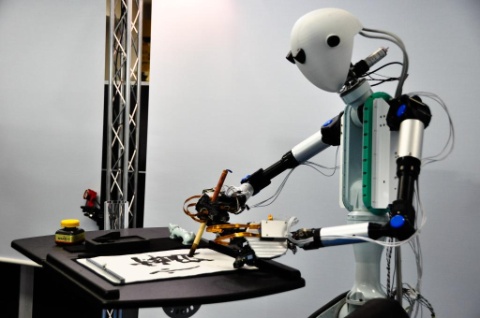

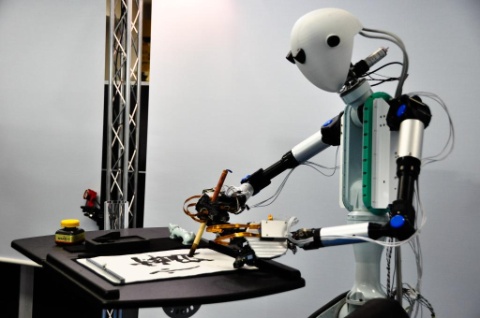

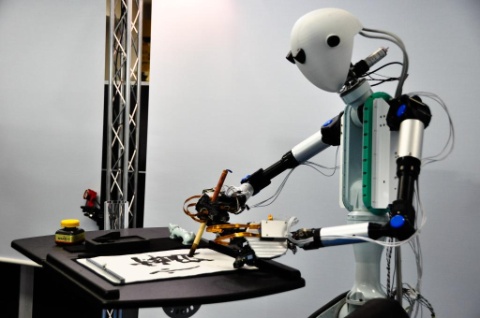

TELESAR V: TELExistence Surrogate Anthropomorphic Robot

Charith Lasantha Fernando, Masahiro Furukawa, Tadatoshi Kurogi, Kyo Hirota, Katsunari Sato, Kouta Minamizawa, and Susumu Tachi, Keio University, Graduate School of Media Design; Sho Kamuro, The University of Tokyo

TELESAR V enables a user to bind with a dexterous robot and experience what that robot can feel with its fingertips when manipulating and touching objects remotely.

Chair Feedback: “Historically, robots have always been a popular Emerging Technologies attraction. This trend continues with the intriguing robotic presence of TELESAR V, a fifth-generation robot that gives the user both control of the robot and the experience that the robot is going through. Imagine being able to remotely perform some task, but also being able to feel the task that is being performed. The future applications are endless in the entertainment, science, or medical fields.”

3D Capturing Using Multi-Camera Rigs, Real-Time Depth Estimation, and Depth-Based Content Creation for Multi-View and Light-Field Auto-Stereoscopic Displays

Peter Tamas Kovacs, Holografika Kft.; Frederik Zilly, Fraunhofer Heinrich-Hertz Institut

This futuristic system captures live HD footage with a wide-baseline multi-camera rig and estimates depth maps for the captured video streams. Based on these depth maps, content is generated and presented on auto-stereoscopic 3D displays.

Chair Feedback: “Prepare to see something big this year at Emerging Technologies. We have a two-fold exhibit with an innovative camera rig for 3D capture, along with the largest (to date) auto-stereoscopic light-field 3D display! The presentation of this new rigging system is exciting enough, but you have to experience this technology to fully appreciate it. Then witness this amazing output on the impressive 140″ (diagonal) glasses-free multi-view and light-field auto-stereoscopic 3D display. That is something you really have to experience in person!”

Tensor Displays

Matthew Hirsch, Douglas Lanman, Gordon Wetzstein, and Ramesh Raskar, MIT Media Lab

This new light-field display system uses stacks of time-multiplexed, attenuating layers illuminated by uniform or directional backlighting, with the layer patterns optimized using non-negative tensor factorization. Tensor displays achieve greater depths of field, wider fields of view, and thinner enclosures compared to prior auto-multiscopic displays.

Chair Feedback: “‘Auto-multiscopic displays’ is certainly a mouthful. But saying ‘3D without glasses’ is well understood by all. Tensor displays are an exciting display technology and are the next logical step in our ever-growing home theater experiences. They allow the user to have a 3D experience via the display but without the assistance of glasses or other such wearable devices.”

Gosen: A Handwritten Notation Interface for Musical Performance and Learning Music

Tetsuaki Baba, Tokyo Metropolitan University; Yuya Kikukawa, Toshiki Yoshiike, Tatsuhiko Suzuki, Rika Shoji, and Kumiko Kushiyama, Graduate School of Design, Tokyo Metropolitan University

Since the 1960s, Optical Music Recognition (OMR) has matured for printed scores, but research on handwritten notation and interactive OMR has been limited. By combining notation with performance, Gosen makes music more intuitive and accessible.

Chair Feedback: “Music is something that everyone enjoys and is a universal language. Yet one aspect that is less understood is the technical perspective of writing and reading music scores. This is an innovative device that can read any handwritten score and play that music. It can even follow written instructions specifying which instrument is to be played. This will change the way children and adults learn, create, and interact with music.”

BOTANICUS INTERACTICUS: Interactive Plants Technology

Ivan Poupyrev, Disney Research, Pittsburgh; Philipp Schoessler, University of Arts Berlin

BOTANICUS INTERACTICUS is a technology for designing highly expressive interactive plants, both living and artificial. With a single wire placed anywhere in the soil, it transforms plants into multi-touch, gesture-sensitive, and proximity-sensitive controllers that can track a broad range of human interactions seamlessly, unobtrusively, and precisely.

Chair Feedback: “It is not a far stretch to imagine a computer being used to time the watering of plants. But how about using the plant as the actual interface to the computer? Imagine being able to use a plant on your coffee table to fast-forward a movie. Lost the remote? Go ahead and use the plant. This is a new and inventive application of a haptic device to manipulate technology. Future applications could be in the home or entertainment industry.”

Ungrounded Haptic Rendering Device for Torque Simulation in Virtual Tennis

Wee Teck Fong, Ching Ling Chin, Farzam Farbiz, and Zhiyong Huang, Institute for Infocomm Research

This interactive virtual tennis system utilizes an ungrounded haptic device to render the ball impact accelerations and torques experienced in real tennis. Novel features include the A-shaped vibrating element with high-power actuators. The application utilizes racket mass distribution and high-amplitude bending for torque generation and haptic rendering.

Chair Feedback: “Many of our current electronic gameplay devices have done away with controllers. However, being able to receive certain feedback in gameplay is helpful for learning and also enhances the entertainment value. Learning tennis could be difficult if you practiced with a digital game that has no controller and then made the transition to playing the real sport outside with a racket. This clever advancement provides the torque and impact of a real tennis match.”